eccosystem

Monday, September 16, 2024

Power Platform (MS CRM/CE) Business Rule Best Practices

- Set the Scope to a specific form (unless there is a really good reason to make it Entity scope)

- Make it affect only one field on a specific form

- Name it after the one form / field it is tied to

- Give it a description that explains WHY it is necessary, not a description of what it does

- Include the inverse action (e.g., lock AND unlock) after the condition, so the rule handles both the true and the false outcome

Wednesday, August 21, 2024

PowerAutomate Flows Trigger Multiple Times - a proposed workaround

Every now and then, I get a PowerAutomate Flow that seems to trigger more than once. Most of the time this is not a problem, but sometimes the runs execute in parallel and end up creating duplicate records. It happens most often when a Dataverse trigger's Change Type is "Added or Modified"; I suspect that something in the API is updating the record right after creating it. My proposed workaround uses a text column on the triggering table, called Token Gate, so that only one of the parallel runs is allowed to continue:

1. When the flow starts, initialize a string variable called "Token" with a "guid()" expression.

2. Update the triggering record's Token Gate field with the Token from step #1.

3. Delay a few seconds (up to 1 minute) to allow any parallel runs to complete step #2.

4. Get the triggering record again (the one you updated in step #2).

5. If the Token from step #1 is not equal to the Token Gate value retrieved in step #4, terminate the flow.
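To see why the gate lets only one run through, here is a minimal Python simulation. It is not Power Automate code: the hypothetical `Record` class stands in for the Dataverse row, its `token_gate` attribute plays the Token Gate column, and each thread plays one flow run following the five steps. Whichever run writes its token last is the only one that reads its own token back.

```python
import threading
import time
import uuid


class Record:
    """Hypothetical stand-in for the triggering Dataverse row."""

    def __init__(self):
        self.token_gate = None          # plays the role of the Token Gate column
        self._lock = threading.Lock()   # one read or write at a time, like a single row update

    def update_token_gate(self, token):
        with self._lock:
            self.token_gate = token

    def get_token_gate(self):
        with self._lock:
            return self.token_gate


def flow_run(record, delay_seconds, results):
    token = str(uuid.uuid4())           # step 1: Token = guid()
    record.update_token_gate(token)     # step 2: write Token to the triggering record
    time.sleep(delay_seconds)           # step 3: give parallel runs time to finish step 2
    current = record.get_token_gate()   # step 4: get the triggering record again
    if current != token:                # step 5: another run wrote after us, so stop
        results.append("terminated")
        return
    results.append("continued")         # only this run goes on to create records


def simulate(runs=3, delay_seconds=0.2):
    record = Record()
    results = []
    start = threading.Barrier(runs)     # make the duplicate triggers fire together

    def run():
        start.wait()
        flow_run(record, delay_seconds, results)

    threads = [threading.Thread(target=run) for _ in range(runs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    outcome = simulate()
    print(outcome.count("continued"), "continued,", outcome.count("terminated"), "terminated")
```

The delay is what makes this work: every run has to finish step #2 before any run reaches step #4. If a second trigger fires after the first run's delay has already expired, both runs will read their own token and continue, so the gate narrows the window for duplicates rather than acting as a true lock.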

Sunday, April 7, 2019

Making PowerBI Easier

I recently learned about a cool tool in XrmToolBox called Power Query M Builder (PQMB) by Mohamed Rasheed (ITLec) and Ulrik “CRM Chart Guy” Carlsson (eLogic LLC), and I love it. It lets you generate Power Query code using Dynamics 365 views as your starting point. You can then tweak the queries with FetchXML Builder to get more complex ones, and finally generate a full query that names the columns using the labels from your CRM metadata. This process is much faster than starting from scratch in the Power Query editor and building up a query to find the right data. It also lets you change the connection URL for all the queries from a single place, which is really helpful if you have a solution you move from dev to production.

I left a message on Ulrik’s blog about one improvement I would like to see: the ability to save and recover views more easily. For example, I have a report that I know needs several different CRM views or custom FetchXML queries. After I have created all the queries in Power Query and started relating them in Power BI, I find that I am missing some columns, so I have to rebuild one or more of my views in PQMB from scratch.

To save some time, I started saving all my FetchXML queries embedded in comments at the end of the Power Query Advanced Editor. Here is what I do:

While in PQMB, I open FetchXML Builder to modify a query, then copy the FetchXML to the clipboard and paste it at the end of the query in the Advanced Editor, wrapped in a /* comment */.

Now, if I need to revise the query later, I can quickly get back to what I had without having to recreate a complex query from scratch.

Saturday, April 6, 2019

How do I hide App navigation in Dynamics 365 CE?

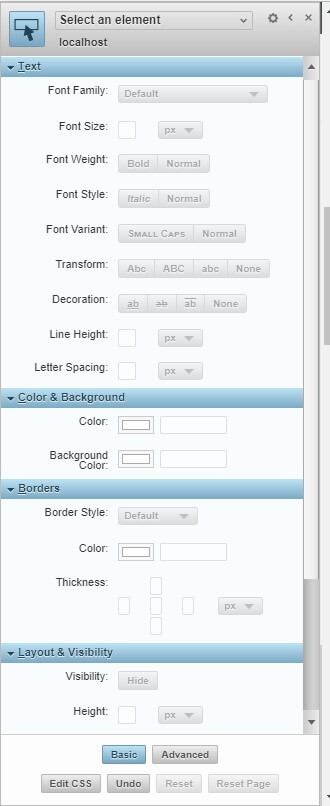

My favorite secret weapon is a Chrome extension called Stylebot. I can change navigation, colors, and even compress whitespace!

After you install it, refresh your screen and open it using the CSS icon in Chrome. You can click on the element you want to change (or hide), and it has friendly buttons to make quick changes. It will save the style changes using the URL of the site. You can export and import the style mods and share them with other users.

Wednesday, February 27, 2019

What is the solution version number used for?

A recent thread of discussion on the CRMUG forums about this topic got me thinking – here is my take on the subject.

For smaller clients with no integration, I think there is value in putting the current date in the solution version number when you export it, so that you can easily keep track of which file in your downloads folder is the right one. A quick acknowledgement to @Gus Gonzalez for making that suggestion. End users can easily understand it, and it adds value for them. A solution file can contain literally anything, and the (typical) version number does nothing to help you figure out what is major/minor/patch or to resolve dependencies. With Gus's technique, the date of the solution is built into the exported file name, so you avoid having multiple copies of a file called "SolutionName_1_0_0_0.zip" and trying to figure out which one is relevant. I would argue that for people creating solutions for small(er) organizations, it is not easy to make the distinction between major and minor. Can anyone here define how many fields on a form must move before you cross the threshold from a patch to a minor release, or how many business rules must change before it becomes a major release?

But for a company with a staff of developers who depend on integration with other systems and who have governance policies, we should consider the benefits of a traditional approach to the version number. For many of my past projects, I have clearly agreed with @Ben Bartle's position because I come at this as a developer. The reason has to do with traditional software development practices: when writing applications that depend on a multitude of libraries (DLLs), keeping track of the version number helps you identify whether an update to a library will significantly impact your application. With a DLL, it is easy to define what is major, minor, or a patch based on best practices.

For a consultant who configures systems for many clients, I would recommend using the client's name as the solution name, putting the D365 version in the first two positions of the version number, and putting the date in YYMM.DD format in the last two positions. This makes it easy to tell which version of D365 you exported the solution from (which could be significant if the development environment is not in sync with staging and production) and the date it was exported.
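Here is a small Python sketch of that numbering scheme. The function names and the solution name `ContosoCRM` are hypothetical, and the file-name helper simply mirrors the `SolutionName_1_0_0_0.zip` pattern of exported solutions. Each position is kept as a plain number, so a date on the 5th of the month comes out as `5` rather than `05`.

```python
from datetime import date


def solution_version(d365_major: int, d365_minor: int, exported: date) -> str:
    """D365 version in the first two positions, YYMM.DD in the last two."""
    yymm = (exported.year % 100) * 100 + exported.month  # 2019-02 -> 1902
    return f"{d365_major}.{d365_minor}.{yymm}.{exported.day}"


def export_file_name(solution_name: str, version: str) -> str:
    """Mirror the SolutionName_1_0_0_0.zip naming of an exported solution."""
    return f"{solution_name}_{version.replace('.', '_')}.zip"


if __name__ == "__main__":
    v = solution_version(9, 1, date(2019, 2, 27))
    print(v)                                  # 9.1.1902.27
    print(export_file_name("ContosoCRM", v))  # ContosoCRM_9_1_1902_27.zip
```

Because the version ends up in the exported file name, exports made on different days no longer collide in your downloads folder, which is the benefit of putting the date in the version.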

To bring this back to the context of this discussion: when you modify a D365 solution and then promote it to production, you are actually updating the API endpoints. Consider what happens when you alter the behavior of workflows, deprecate entities/fields, or shorten a field's length. When you promote that solution into production, you run the risk of breaking integration code.

Personally, I have been putting the date in the solution's Information/Description field. While this is not as pretty as using the version number as a proxy for a date, it serves its purpose because you can see that the solution description was updated in the production system. The only downside of that approach is that you have to either import the solution file to see the description containing the date, or unzip the solution file and read the XML files.

As for keeping track of whether a solution file contains a major, minor, or patch change to a system, that is something everyone should be documenting anyway, regardless of whether you go with Gus's or Ben's recommendation. Beyond keeping a log of changes and/or implementing full version control between development/staging/production, you should be communicating your changes to your testing staff or your end users, no matter how small the change is. If users detect a change that you were not in control of, they might think the system is unstable, and they will lose confidence in it.

Friday, February 22, 2019

Dashboard Options in Customer Engagement

A dashboard can take many forms. I have seen some very creative ideas that use roll-up calculations in Goals to generate the data and then a view plus chart to display the results. SSRS is another possibility, but it shares a problem with Goals: a limit on the number of records it can work with.

There is a free add-on to Excel called Power Query, which you might find useful because it lets Excel query data from Customer Engagement (CE); your dashboard is then a tab that presents the data with charts or tables. My favorite dashboard tool these days is Power BI because it lets users interact with the data, gives you as a developer more control over the results, and has fewer restrictions on the number of records you can work with. You can also embed Power BI in a CE dashboard so it appears that you never leave CE.

If you want to do forecasting, the Probability field on the Opportunity is used as a multiplier against the Estimated Revenue, and, when used in the aggregate, the result is called the Expected Value. It is commonly used to forecast revenue for a sales pipeline report.
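As a worked example: the Probability field holds a percentage from 0 to 100, so each opportunity contributes Estimated Revenue × Probability / 100, and the pipeline's Expected Value is the sum of those amounts. The Python sketch below assumes the records have already been retrieved; the `Opportunity` class and its attribute names are illustrative, not CE schema names.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    # Illustrative fields; map them to your own Estimated Revenue and Probability columns.
    name: str
    estimated_revenue: float
    probability: int  # percentage, 0-100


def weighted_revenue(opp: Opportunity) -> float:
    """Estimated Revenue scaled by the chance of closing."""
    return opp.estimated_revenue * opp.probability / 100


def expected_value(pipeline: list[Opportunity]) -> float:
    """Aggregate Expected Value of the whole pipeline."""
    return sum(weighted_revenue(o) for o in pipeline)


if __name__ == "__main__":
    pipeline = [
        Opportunity("Renewal", 10_000, 50),   # contributes 5,000
        Opportunity("New logo", 20_000, 25),  # contributes 5,000
        Opportunity("Upsell", 8_000, 100),    # contributes 8,000
    ]
    print(expected_value(pipeline))  # 18000.0
```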

If you are a fan of predictive models, you could use the current year's sales, adjustments for seasonality, and the current growth curve to predict future sales. I just finished taking a master's class on this subject and would be interested in helping you out.
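One of the simplest versions of that idea, sketched in Python under my own assumptions: take this year's sales by period (which carries the seasonal pattern), measure year-over-year growth from the two annual totals, and scale each period by that growth. This is a seasonal naive forecast with a growth adjustment, not a full predictive model; the function names and sample figures are hypothetical.

```python
def yoy_growth(last_year: list[float], this_year: list[float]) -> float:
    """Year-over-year growth rate from the two annual totals."""
    return sum(this_year) / sum(last_year) - 1


def seasonal_forecast(this_year: list[float], growth: float) -> list[float]:
    """Next year's periods: same seasonal shape as this year, scaled by growth."""
    return [period * (1 + growth) for period in this_year]


if __name__ == "__main__":
    last_year = [100, 150, 120, 130]  # quarterly sales, hypothetical
    this_year = [110, 165, 132, 143]  # 10% higher in every quarter
    g = yoy_growth(last_year, this_year)
    print([round(x, 1) for x in seasonal_forecast(this_year, g)])
```

A real model would usually fit the trend and seasonal indices over several years of history instead of a single year-over-year ratio.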

Sunday, February 10, 2019

Why is D365 running so slow?

- Synchronous workflows and plugins – You could test this (in a sandbox) by deactivating them and seeing whether performance changes.

- Check your Settings/System Jobs to see if there is a lot of activity in there (especially failures). If you have years' worth of system job data, I highly recommend setting up scheduled Bulk Record Deletion jobs.

- Duplicate detection rules – how many published rules do you have? Turn them off and see if there is a difference.

- Browser plugins (including antivirus)

- Browser cache – depends on the browser, but I highly recommend clearing your cache daily because MS is updating the form rendering engine all the time.

- Is Auditing turned on? It could play a small part in your overall performance.

- External apps, such as PowerApps, Flows, and ClickDimensions, that might add load to your database. If you set up an Azure application in a different region from your CRM instance, it could hurt system performance (and add unnecessary cost).

- Network throughput (bandwidth) – In a recent Gartner survey, 22% of IT leaders identified networking problems as the root cause of performance issues with Office 365. I have customers who have researched their connection speed to different MS data centers and gotten unexpected results. Just because you are close to a data center does not mean it will be faster.

- Time of day – Sometimes I can see a difference in performance depending on the time of day, which is likely to be related to my upstream network throughput or the data center where my D365 instance is located. The only way I could test this would be to create a dedicated data circuit between my office and my data center.

- JavaScript embedded in your forms that makes a lot of API calls. Use the browser's built-in performance tools to see what is happening.

- Asynchronous workflows add workload to your database while they run. For example, if you have imported 100k records, which might kick off 200k async workflows, users should expect slow performance while those workflows are hitting your system.

- Relevance Search and Text Analytics – these crawl your site to index your data, which adds load to your system.

- Legacy form rendering – you could experiment with this System Setting, but be warned: MS says that legacy form rendering will be turned off.

- Server Side Sync – do you have a lot of email coming in? Are new contacts created?

- Number of rollup queries – you can see how much activity is going on in the System Jobs log (mentioned above). This also includes things like Goals.

- Cascading relationships – Look at each 1:N relationship and see what the “down-stream” effect is of your updates.