How many times have you been creating a view, and found that a column you need is on a related table two or three relations "up" the parent relationship chain? Now you can get those column into your view easily using a PowerFx formula. Start by creating a column in a table and selecting a "Formula" data type.

For example, lets say you are designing a view of opportunities, and you want to see the territory name of the manager of the sales person that owns an opportunity. You have these tables:

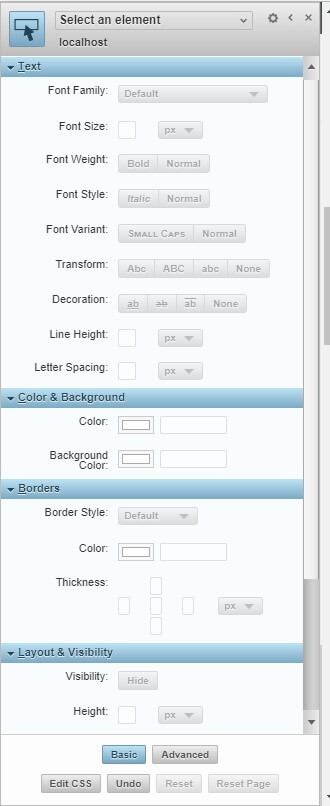

A new "Formula" field will appear; type the name of a lookup column from your current table, and it will prompt you with the column names from that related table. Put a period at the end of the column and it will prompt you with all the columns (and other parental lookups) from that table. You can continue using the 'dot' notation to link up multiple levels in the relationships as necessary to get the column you want. Here is how my example looks when adding a field to the opportunity table:

The data type and format depend on the data type of the field you select.

There are some limitations, such as with currency. For those cases, you need to use the Value() function to change the field into a Decimal data type before you can use it. At that point, you can choose formatting under the Advanced Options:

I now prefer to use the PowerFx over the classic calculated field. I have found that when I promote a solution as managed, then the classic calculated field's "edit" icon is inactive and will not let me see the underlying formula.

I have learned through experimentation that the PowerFx formulas don't do a good job with date/time fields when used on email templates; no matter what type of date/time formatting I have tried, it will always show the time. If you find a way to make PowerFx work on templates, I would like to hear back!

You can read more about these formulas in the MS documentation: Work with Dataverse formula columns - Power Apps | Microsoft Learn